Parliamentary Committee Issues Stark Warning

According to reports from the Commons science and technology select committee, the United Kingdom faces imminent risk of repeated civil unrest unless the government takes stronger action against online misinformation. The committee chair, Chi Onwurah, has accused ministers of complacency regarding social media content that could trigger public disturbances similar to the 2024 summer riots.

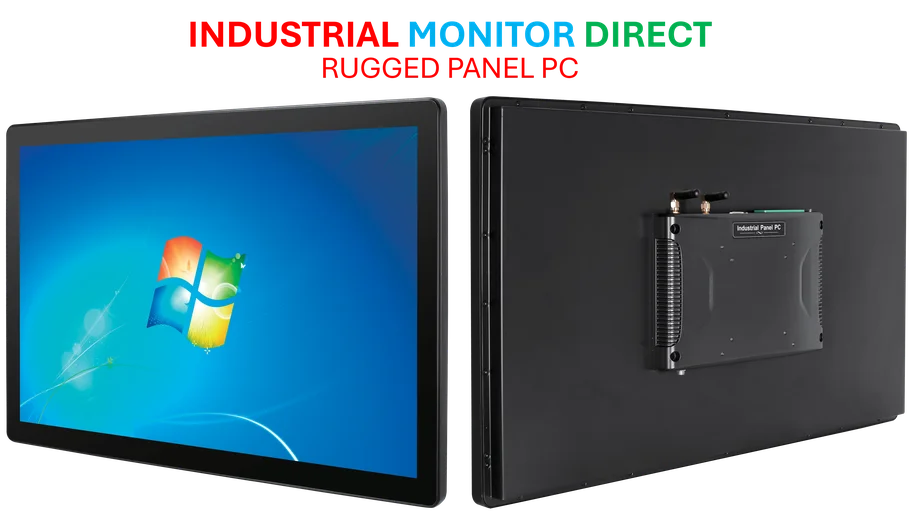

Regulatory Gaps in Online Safety Framework

The committee’s report, titled “Social Media, Misinformation and Harmful Algorithms,” indicates that the current Online Safety Act contains significant gaps in addressing rapidly evolving threats. According to the report, inflammatory AI-generated images circulated widely on social media platforms following the Southport stabbings, which claimed three children’s lives. Analysts suggest generative AI tools have dramatically lowered barriers to creating harmful or deceptive content.

“The government urgently needs to plug gaps in the Online Safety Act, but instead seems complacent about harms from the viral spread of legal but harmful misinformation,” Onwurah stated. “Public safety is at risk, and it is only a matter of time until the misinformation-fuelled 2024 summer riots are repeated.”

Government Response Draws Criticism

The government has rejected key recommendations from the select committee, including calls for specific legislation targeting generative AI platforms and intervention in digital advertising markets. According to the committee, these advertising systems enable monetization of harmful content, creating financial incentives for spreading misinformation.

In its official response, the government maintained that existing legislation already covers AI-generated content and that further laws would hinder implementation of the Online Safety Act. However, testimony from Ofcom reportedly suggests that AI chatbots are not fully captured by current regulations and that further consultation with technology companies is needed.

Digital Advertising Models Under Scrutiny

The committee specifically highlighted concerns about advertising-based business models that allegedly incentivize social media platforms to algorithmically amplify engaging but misleading content. According to the analysis, these systems contributed to the spread of false information about the Southport attacker’s identity.

“Without addressing the advertising-based business models that incentivise social media companies to algorithmically amplify misinformation, how can we stop it?” Onwurah questioned. The government has acknowledged transparency concerns in online advertising but declined immediate action, instead pointing to ongoing initiatives focused on accountability in the sector.

Research and Reporting Recommendations Rejected

The government also turned down committee requests for additional research into how social media algorithms amplify harmful content and for annual parliamentary reports on the state of online misinformation. Officials argued that such reports could expose and hinder government operations to limit the spread of harmful information, and suggested that Ofcom is “best placed” to determine what research is necessary.

This approach contrasts with historical responses to health misinformation during the pandemic, where coordinated monitoring and reporting played crucial roles. The committee expressed particular disappointment with the responses on AI regulation and digital advertising, noting that technology is developing too rapidly for existing frameworks to remain adequate.

Broader Implications for Policy and Security

The debate over misinformation regulation occurs alongside other significant government challenges in technology and security policy. As the committee warns of potential social instability, the government’s stance reflects broader tensions between regulation and innovation in digital spaces.

Industry observers note that the effectiveness of current approaches to content moderation will likely determine whether the UK can prevent repeated incidents of misinformation-fueled violence. With the government maintaining that existing mechanisms suffice and Parliament pushing for stronger action, the stage is set for continued debate over how to balance free expression with public safety in the digital age.

The UK government distinguishes between misinformation (inadvertent spread of false information) and disinformation (deliberate creation and spread of false information to cause harm), but the committee suggests both require more robust regulatory responses given their potential real-world consequences, as outlined in official documentation on government approaches to harmful content.
