The AI Hardware Paradox: Sky-High Purchase Costs, Plummeting Rental Rates

The artificial intelligence sector presents a curious economic contradiction: while purchasing cutting-edge AI equipment requires massive capital investment, renting that same hardware has become increasingly affordable. This divergence points to pressures building within the AI ecosystem. As GPU rental companies compete ever harder on price, the market dynamics suggest turbulence ahead for the broader AI industry.

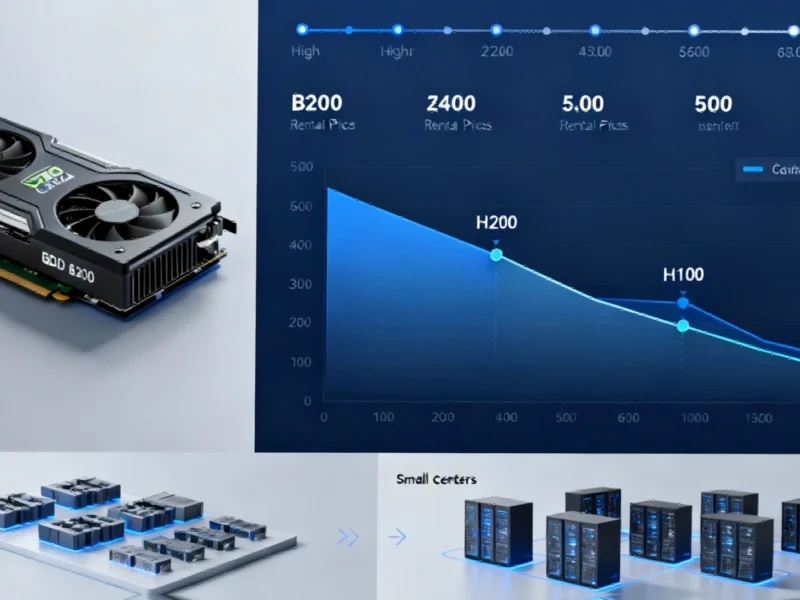

Consider Nvidia’s B200 GPU accelerator as a case study. When this hardware launched in late 2024, purchasing a single unit would have set buyers back approximately $500,000, before accounting for the substantial additional costs of power, cooling, and infrastructure. Yet by early 2025, the same computational power could be rented for roughly $3.20 per hour. The decline continued through last month, with B200 rates falling to a floor of $2.80 per hour. This intensifying price war among GPU rental providers creates both opportunities and vulnerabilities throughout the AI value chain.
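To put the B200 figures in perspective, the short sketch below computes the percentage fall in the hourly rate and how many hours of rental at the latest rate would add up to the reported purchase price. It takes the reported numbers at face value and assumes, as a simplification, that the $500,000 price and the hourly rates refer to the same amount of compute; power, cooling, and infrastructure costs are ignored. The function names are illustrative only.

```python
# Reported B200 figures, taken at face value. Assumption: the purchase
# price and the hourly rental rates cover the same amount of compute.
PURCHASE_PRICE_USD = 500_000
EARLY_2025_RATE = 3.20   # USD per hour
LATEST_RATE = 2.80       # USD per hour (reported floor)
HOURS_PER_YEAR = 24 * 365


def percent_decline(old: float, new: float) -> float:
    """Percentage fall from an old price to a new one."""
    return (old - new) / old * 100


def rental_hours_to_match(price: float, hourly_rate: float) -> float:
    """Hours of rental whose total cost equals the purchase price.

    Ignores power, cooling and other operating costs on both sides.
    """
    return price / hourly_rate


if __name__ == "__main__":
    drop = percent_decline(EARLY_2025_RATE, LATEST_RATE)
    hours = rental_hours_to_match(PURCHASE_PRICE_USD, LATEST_RATE)
    print(f"Rate decline: {drop:.1f}%")
    print(f"Rental hours to equal purchase price: {hours:,.0f}")
    print(f"Equivalent years of continuous rental: {hours / HOURS_PER_YEAR:.1f}")
```

Under these assumptions, the move from $3.20 to $2.80 is a 12.5% cut, and a renter would need roughly 178,000 hours of continuous use, about two decades, before cumulative rental fees matched the purchase price.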

The Hyperscaler Premium: Why Major Clouds Resist Price Erosion

While overall GPU rental prices trend downward, the market has fractured into distinct tiers with dramatically different pricing strategies. Data from RBC Capital Markets show year-to-date declines of 29% and 22% in per-hour rental rates for Nvidia’s H200 and H100 chips, respectively. However, these averages mask a critical segmentation: among the hyperscalers (Amazon Web Services, Microsoft Azure, Google Cloud, and Oracle), prices have remained remarkably stable.

The result is an ever-widening chasm between the rates charged by the big four cloud providers and a growing cohort of smaller competitors. This represents an inversion of typical technology market dynamics, where established players usually leverage scale to undercut newcomers. The persistence of the hyperscaler premium suggests that certain customer segments prioritize factors beyond pure computational cost.

Customer Segmentation Explains the Pricing Dichotomy

The GPU-as-a-service market serves fundamentally different customer types with varying priorities. AI startups and research institutions historically constituted the core market: entities requiring massive computing power for limited periods to train new models. For these users, staying with an established hyperscaler offers continuity, efficiency, and security benefits that may justify paying premium rates.

The second major customer category consists of conventional corporations seeking to implement AI capabilities such as chatbots, summarization tools, or similar applications. Most of these organizations lack the expertise or the desire to manage the underlying infrastructure. Increasingly, this segment bypasses GPU rental entirely, opting instead for ready-made large language models from providers such as OpenAI or Anthropic and paying by the token rather than by the compute hour. This shift reflects a maturing market in which many buyers prefer to consume AI as a finished service rather than assemble it from raw compute.

The Remaining Market: Niche Users and Questionable Economics

As mainstream customers migrate toward simplified AI solutions, the GPU rental market increasingly serves specialized niches. The remaining users include industrial applications, academic researchers with limited budgets, aspiring quantitative traders, and hobbyist developers. Some want to avoid off-the-shelf models so they can generate customized content, while others pursue applications that mainstream providers may restrict under their content policies, an issue that has featured in recent debates over AI content moderation.

The critical question is whether GPU-as-a-service providers can turn a profit serving these residual market segments. A simplified economic model illustrates the challenge: Nvidia’s entry-level A100 chip cost $199,000 at its 2020 launch. Assuming a five-year useful life and continuous operation (5 × 8,760 = 43,800 hours), the hardware would need to earn about $4.50 per hour just to recover its purchase price. Yet the current market average for A100 rentals has fallen to about $1.65 per hour, with some providers offering rates as low as $0.40 while hyperscalers continue to charge above $4.
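The break-even arithmetic above can be sketched in a few lines. This is a minimal illustration, not the model behind the reported figures: it uses the $199,000 A100 price as reported, counts only the purchase price (no power, cooling, financing, or staff), and adds a hypothetical `utilization` parameter (the fraction of hours actually rented out) that defaults to the continuous-operation case described in the text.

```python
HOURS_PER_YEAR = 24 * 365


def break_even_rate(price: float, years: float, utilization: float = 1.0) -> float:
    """Hourly rate needed to recover the purchase price over the useful life.

    utilization is a hypothetical parameter: the fraction of hours actually
    rented (1.0 = continuous operation). Operating costs are ignored.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return price / (years * HOURS_PER_YEAR * utilization)


def payback_years(price: float, hourly_rate: float, utilization: float = 1.0) -> float:
    """Years of rental needed to recover the purchase price at a given rate."""
    return price / (hourly_rate * HOURS_PER_YEAR * utilization)


if __name__ == "__main__":
    price = 199_000  # A100 launch price, as reported
    print(f"Break-even (5 years, continuous): ${break_even_rate(price, 5):.2f}/h")
    # Reported rental rates: low-cost providers, market average, hyperscalers
    for label, rate in [("low-cost provider", 0.40),
                        ("market average", 1.65),
                        ("hyperscaler", 4.00)]:
        print(f"{label:>18}: {payback_years(price, rate):5.1f} years to pay back")
```

With these inputs the break-even rate comes to about $4.54 per hour, and recovering the purchase price at the $1.65 market average would take nearly 14 years of uninterrupted rental, well beyond the assumed five-year life. Because the rate scales with `1 / utilization`, any idle time pushes the break-even point higher still.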

Infrastructure Implications of the GPU Price Squeeze

The economic pressures on GPU rental providers extend beyond simple rate cards. As competition intensifies, operators must optimize every aspect of their infrastructure to remain viable. This includes implementing advanced cooling solutions, with liquid cooling emerging as a critical requirement for managing the thermal output of high-density GPU installations. The efficiency gains from such infrastructure investments can determine whether providers survive the ongoing price compression.

Meanwhile, enterprise adoption patterns continue to evolve as organizations develop more sophisticated AI strategies. Companies moving beyond experimental deployments toward scaling physical AI infrastructure are finding that sustainable adoption requires planning well beyond securing computational access. Similar strategic planning is evident in other sectors: in healthcare, AI tends to work best as a physician’s collaborator rather than a replacement, and in construction, it is reshaping operations beyond conventional automation.

Five Implications for the AI Ecosystem

Market Segmentation Will Intensify: The GPU rental market will likely bifurcate further, with hyperscalers focusing on enterprise clients requiring reliability and support, while specialists serve niche applications at razor-thin margins.

Consolidation Appears Inevitable: The current price competition seems unsustainable for many smaller providers, suggesting a wave of mergers and acquisitions lies ahead as the market matures.

Alternative Architectures May Gain Traction: As Nvidia’s pricing power faces pressure, competitors and alternative processing architectures may find renewed interest from cost-conscious users.

The AI Development Stack Is Compressing: The trend toward API-based AI services rather than raw compute rental suggests the market is developing more efficient abstraction layers.

Infrastructure Innovation Becomes Critical: Providers surviving the price war will likely be those making strategic investments in power efficiency, cooling technology, and operational automation.

The GPU rental market’s current trajectory offers a microcosm of the broader AI industry’s challenges. As price disparities widen and customer segments fragment, the industry appears to be approaching an inflection point. The coming months will reveal whether current pricing represents temporary market adjustment or signals more fundamental structural issues within the AI economy.

Based on reporting by the Financial Times (ft.com). This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.