According to CRN, Cisco has launched its Unified Edge platform at Cisco Partner Summit 2025, describing it as “not a server, but a platform” that integrates compute, networking, storage, and security in a single appliance for edge AI processing. The modular system supports software from third-party vendors including Nutanix, VMware, and Microsoft, has front-accessible slots for NVIDIA GPUs, and is designed to deliver data center-level redundancy at the edge while remaining in service for the next decade. Senior executives Jeremy Foster and Jeff Schultz emphasized the platform’s ability to handle rising data volumes and AI inference workloads in distributed locations. The system is orderable immediately and is scheduled to ship in December. The launch is a strategic response to Cisco’s projection that 75 percent of enterprise data will be created and processed at the edge by 2027.

The Architectural Revolution: From Centralized to Distributed AI

The architectural shift Cisco is enabling moves beyond traditional hub-and-spoke models, in which edge devices serve as mere data collectors feeding centralized AI clusters. What makes Unified Edge significant is its recognition that certain AI workloads cannot tolerate the latency of a round trip to a distant data center. Real-time computer vision for manufacturing quality control, autonomous robotics coordination, and medical imaging analysis can require fast, predictable response times that are difficult to guarantee when data must cross a wide-area network to a facility hundreds of miles away; beyond raw distance, congestion, jitter, and link outages all eat into the response budget. The platform’s modular design acknowledges that edge environments demand different reliability characteristics than data centers: they must operate with minimal local IT support while maintaining high availability for critical operations.
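A rough latency budget shows why distance matters most for tight, per-frame deadlines. The sketch below is illustrative only and uses no Cisco figures: it assumes light in fiber covers about 200 km per millisecond, a hypothetical 30 fps inspection camera, a hypothetical 15 ms local inference time, and an assumed 20 ms of routing and queuing overhead on the wide-area path. Under these assumptions, propagation over 300 miles adds only about 5 ms, but WAN overhead pushes the remote path past the 33 ms frame budget.

```python
"""Rough latency budget: edge inference vs. a distant data center.

All constants are illustrative assumptions, not vendor-published figures.
"""

FIBER_KM_PER_MS = 200.0  # assumed: light in fiber travels ~200 km per ms (~2/3 of c)
MILES_TO_KM = 1.609344


def propagation_rtt_ms(distance_miles: float) -> float:
    """Round-trip propagation delay over fiber for a given one-way distance."""
    one_way_km = distance_miles * MILES_TO_KM
    return 2 * one_way_km / FIBER_KM_PER_MS


def total_latency_ms(distance_miles: float, inference_ms: float, network_overhead_ms: float) -> float:
    """Propagation + assumed routing/queuing overhead + model inference time."""
    return propagation_rtt_ms(distance_miles) + network_overhead_ms + inference_ms


def fits_budget(latency_ms: float, fps: float) -> bool:
    """True if a result arrives before the next frame (budget = 1000 / fps ms)."""
    return latency_ms <= 1000.0 / fps


if __name__ == "__main__":
    FPS = 30  # hypothetical camera frame rate on an inspection line
    INFERENCE_MS = 15.0  # hypothetical per-frame model latency on a GPU
    budget = 1000.0 / FPS

    # Edge: camera and appliance on the same site (~0.1 mile), minimal LAN overhead.
    edge = total_latency_ms(0.1, INFERENCE_MS, network_overhead_ms=1.0)
    # Remote: data center 300 miles away, with assumed WAN overhead.
    remote = total_latency_ms(300, INFERENCE_MS, network_overhead_ms=20.0)

    print(f"frame budget: {budget:.1f} ms")
    print(f"edge:   {edge:.1f} ms, fits={fits_budget(edge, FPS)}")
    print(f"remote: {remote:.1f} ms, fits={fits_budget(remote, FPS)}")
```

Changing the assumed overhead or frame rate shows how quickly the margin disappears; the propagation term alone is rarely the deciding factor at these distances.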

Technical Implementation Challenges and Solutions

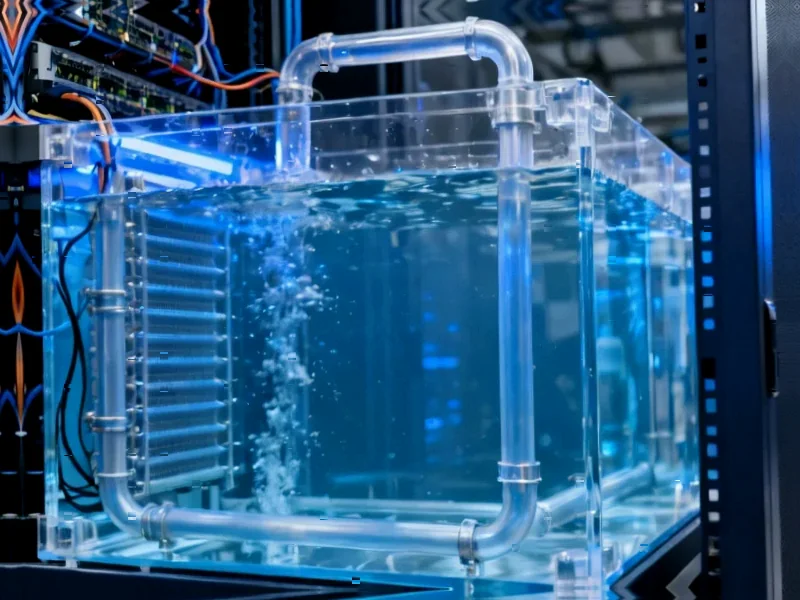

Building enterprise-grade AI infrastructure for the edge presents unique technical challenges that Cisco appears to have addressed systematically. Integrating the Cisco Intersight management platform is crucial for keeping operations consistent across distributed locations, but the more notable engineering lies in redundancy and reliability. Edge locations typically lack the cooling, power conditioning, and physical security of data centers, so the hardware must tolerate wider environmental variation. The GPU strategy suggests Cisco expects edge AI workloads to evolve rapidly: the front-accessible design allows GPUs to be upgraded without replacing the whole system, keeping pace with AI hardware innovation while preserving the promised 10-year infrastructure lifespan.

Market Implications and Competitive Landscape

Cisco’s move positions it directly against emerging edge computing specialists and cloud providers extending their reach to the edge. The platform’s support for multiple hypervisors and management systems reflects a pragmatic approach to heterogeneous enterprise environments, and it is also a defensive move against cloud providers, which typically prefer their own stack. For channel partners, this creates significant services opportunities around implementation, integration, and ongoing management of distributed AI infrastructure. The timing is strategic, as many enterprises are only beginning to understand their edge AI requirements and lack the expertise to architect solutions themselves. By providing a pre-integrated platform, Cisco reduces implementation risk while laying a foundation for future AI workload expansion.

Future Workload Evolution and Scalability Considerations

The most forward-looking aspect of Unified Edge is its recognition that today’s edge AI workloads are just the beginning. As NVIDIA’s AI platforms continue to evolve, the range of models that can run efficiently at the edge is likely to expand considerably. Workloads that today center on GPU-intensive computer vision may soon include complex natural language processing and multimodal AI applications. The platform’s architecture suggests Cisco anticipates this evolution by building in headroom for more demanding workloads. The real test, however, will be how effectively the system orchestrates distributed AI models that must be updated, monitored, and secured across potentially thousands of locations with varying connectivity and local constraints.

Security and Management in Distributed AI Environments

Distributing AI processing creates significant security challenges that centralized AI clusters avoid. Each edge location becomes a potential attack vector, and the data being processed locally may include sensitive information that previously remained within secured data centers. Cisco’s integration of security into the platform architecture is essential, but the real challenge will be maintaining consistent security policies and model integrity across distributed locations with intermittent connectivity. The management paradigm shift from centralized AI operations to distributed intelligence requires new tools and processes that many organizations haven’t yet developed. Success will depend not just on the hardware platform but on the operational frameworks that emerge around managing distributed AI infrastructure at scale.