TITLE: Most People Fail to Notice Racial Bias in AI Training Data, Study Finds

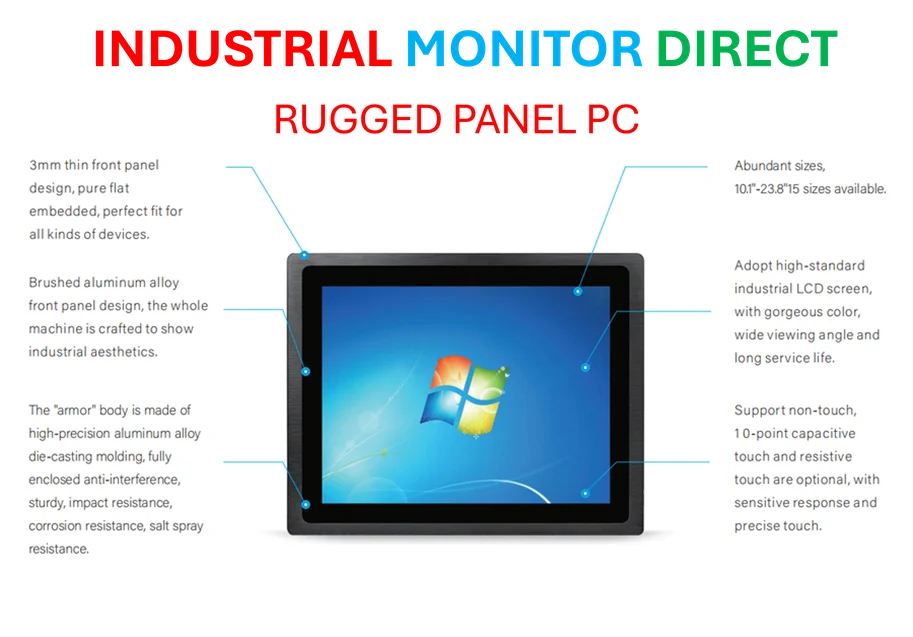


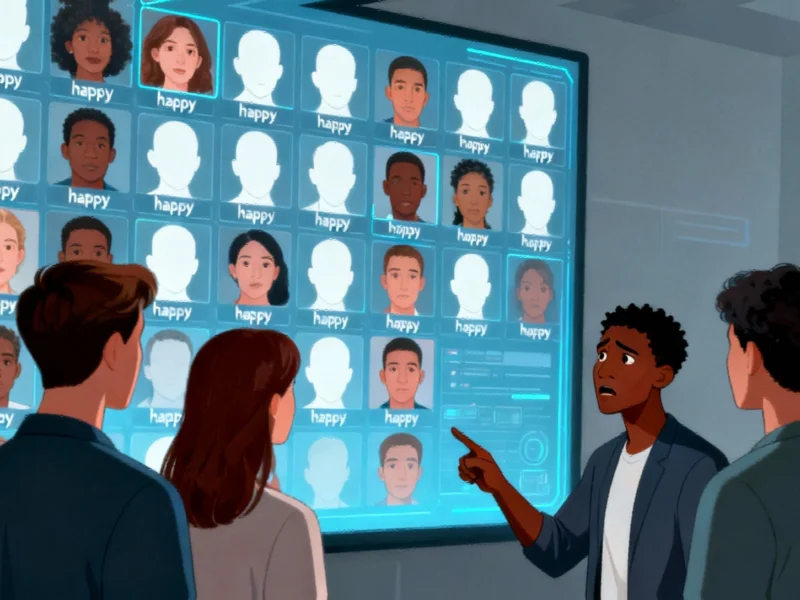

In a study published in Media Psychology, researchers found that most people fail to detect racial bias in artificial intelligence training data, even when it’s explicitly presented to them. The research, which examined how laypersons perceive skewed datasets used to train AI systems, found that only those from negatively portrayed racial groups were likely to identify the problematic patterns. This widespread inability to recognize bias in training data has significant implications for how AI systems are developed and deployed across various sectors.

The findings from this comprehensive research demonstrate a critical gap in public understanding of how AI systems can perpetuate racial stereotypes through seemingly neutral training processes. Senior author S. Shyam Sundar, Evan Pugh University Professor and director of the Center for Socially Responsible Artificial Intelligence at Penn State, noted that “AI seems to have learned that race is an important criterion for determining whether a face is happy or sad, even though we don’t mean for it to learn that.” This unintended learning occurs when training data contains disproportionate representations of certain racial groups in specific emotional categories.

The Psychology Behind Bias Detection Failure

Across three experiments involving 769 participants, researchers created 12 versions of a prototype AI system designed to detect facial expressions. The first experiment presented participants with training data where happy faces were predominantly white and sad faces were mostly Black. The second experiment showed bias through inadequate representation, featuring only white subjects in both emotional categories. The third experiment presented various combinations of racial and emotional pairings to test detection under different conditions.

Remarkably, most participants across all three scenarios indicated they didn’t perceive any bias in the training data. “We were surprised that people failed to recognize that race and emotion were confounded, that one race was more likely than others to represent a given emotion in the training data—even when it was staring them in the face,” Sundar commented. This finding highlights what the researchers describe as a fundamental trust in AI’s perceived neutrality, even when evidence suggests otherwise.
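The confound Sundar describes, where one race is more likely than others to represent a given emotion, can in principle be checked mechanically before a model is trained. The following is a minimal sketch in Python, not the researchers' method: the function names, the toy data, and the choice of a simple share gap as the measure are all assumptions made for illustration.

```python
from collections import Counter

def label_share_by_group(samples):
    """Return {group: {label: share}} for a list of (group, label) pairs."""
    pair_counts = Counter(samples)
    group_totals = Counter(group for group, _ in samples)
    shares = {}
    for (group, label), n in pair_counts.items():
        shares.setdefault(group, {})[label] = n / group_totals[group]
    return shares

def max_share_gap(shares, label):
    """Largest difference between groups in the share of one label."""
    values = [by_label.get(label, 0.0) for by_label in shares.values()]
    return max(values) - min(values)

# Hypothetical toy data, invented for this sketch (not the study's dataset):
# happy faces are mostly white and sad faces are mostly Black.
toy_training_set = (
    [("white", "happy")] * 45 + [("white", "sad")] * 5
    + [("Black", "happy")] * 5 + [("Black", "sad")] * 45
)

shares = label_share_by_group(toy_training_set)
gap = max_share_gap(shares, "happy")
print(shares)
print(f"gap in 'happy' share between groups: {gap:.2f}")
```

A gap near zero would mean each group is about equally likely to appear under the label; a large gap signals that group and label are entangled in the data. A dataset containing only one group, as in the second experiment, would need a separate representation check, since this gap compares groups that are actually present.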

Differential Awareness Among Racial Groups

The study revealed significant differences in bias detection between racial groups. Black participants were more likely to identify racial bias, particularly when the training data over-represented their own group for negative emotions like sadness. According to lead author Cheng “Chris” Chen, assistant professor of emerging media and technology at Oregon State University, this pattern emerged most clearly in scenarios where “the system failed to accurately classify the facial expression of the images from minority groups.”

This differential awareness suggests that lived experience with racial bias may heighten sensitivity to its presence in technological systems. The researchers intentionally recruited equal numbers of Black and white participants for the third experiment to better understand these detection patterns. The results indicate that diversity in both AI development teams and testing groups could be crucial for identifying and addressing bias before systems are deployed in critical applications.

The Performance Bias Trap

Chen emphasized that people’s inability to detect racial confounds in training data leads them to rely heavily on AI performance for evaluation. “Bias in performance is very, very persuasive,” Chen explained. “When people see racially biased performance by an AI system, they ignore the training data characteristics and form their perceptions based on the biased outcome.” This creates a dangerous feedback loop where biased performance validates itself, making the underlying data issues harder to identify and address.

The researchers noted that this performance-focused evaluation mirrors challenges seen in other technological domains, including graphics driver development, where visible performance improvements often overshadow underlying architectural issues. In both cases, users tend to focus on immediate outcomes rather than the foundational elements that produce those results.
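The biased performance Chen refers to can be made concrete by reporting accuracy separately for each group rather than as a single overall number. The sketch below is one assumed way to do that in Python; the function name and the toy predictions are hypothetical and are not drawn from the study.

```python
def accuracy_by_group(records):
    """Compute accuracy per group from (group, true_label, predicted_label) tuples."""
    correct, total = {}, {}
    for group, truth, predicted in records:
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + (truth == predicted)
    return {group: correct[group] / total[group] for group in total}

# Hypothetical toy predictions, invented for this sketch: the classifier
# misreads more expressions for one group than for the other.
toy_predictions = (
    [("white", "happy", "happy")] * 9 + [("white", "sad", "happy")] * 1
    + [("Black", "happy", "happy")] * 6 + [("Black", "happy", "sad")] * 4
)

per_group = accuracy_by_group(toy_predictions)
overall = sum(t == p for _, t, p in toy_predictions) / len(toy_predictions)
print(f"overall accuracy: {overall:.2f}")
for group, acc in per_group.items():
    print(f"{group}: {acc:.2f}")
```

In this toy case the overall figure sits between the two group figures and hides the difference between them, which is why a per-group breakdown is useful alongside any inspection of the training data itself.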

Pathways to More Responsible AI

The research team has been studying algorithmic bias for five years and emphasizes that AI systems should be trained to “work for everyone,” producing diverse and representative outcomes for all groups, not just majority populations. Future research will focus on developing better methods to communicate inherent AI bias to users, developers, and policymakers. Improving media and AI literacy represents a crucial step toward creating more equitable systems.


Sundar stressed that the study’s importance extends beyond technology to human psychology. “People often trust AI to be neutral, even when it isn’t,” he noted. This misplaced trust, combined with the difficulty of detecting bias in training data, creates significant challenges for creating fair and representative AI systems. The researchers hope their findings will inspire more transparent development practices and better educational resources to help all stakeholders recognize and address bias before it becomes embedded in operational systems.

The study underscores the urgent need for diverse perspectives in AI development and evaluation, particularly as these systems become increasingly integrated into critical decision-making processes across healthcare, finance, security, and social services. Without improved detection capabilities and more representative training data, AI systems risk perpetuating and amplifying existing social biases on an unprecedented scale.

Based on reporting by Phys.org. This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.