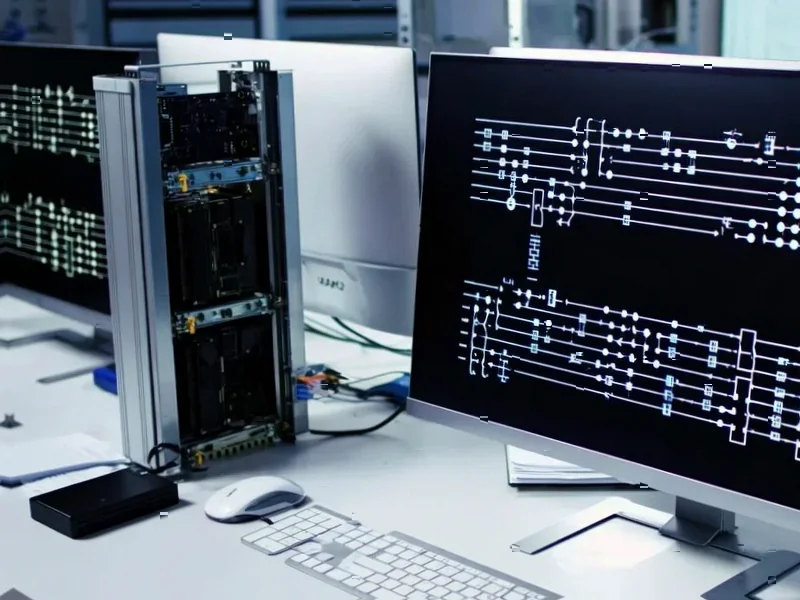

According to GSM Arena, OpenAI has announced a strategic partnership with Amazon Web Services that lets the ChatGPT maker begin running its advanced AI workloads on AWS infrastructure immediately. The seven-year deal represents a $38 billion commitment, under which AWS will provide OpenAI with Amazon EC2 UltraServers featuring hundreds of thousands of Nvidia GPUs and the ability to scale to tens of millions of CPUs. All capacity under this agreement is targeted for deployment before the end of 2026, with an option to expand further from 2027 onward. According to the company’s announcement, the architecture clusters Nvidia GB200 and GB300 GPUs on the same network for low-latency performance across interconnected systems. This massive infrastructure investment signals a fundamental shift in how AI companies approach compute scaling.

The Strategic Shift in AI Infrastructure

This partnership represents a significant departure from OpenAI’s previous heavy reliance on Microsoft Azure, marking a deliberate diversification strategy that reduces single-provider dependency. While Microsoft remains a major investor and partner, this AWS deal gives OpenAI crucial negotiating leverage and operational flexibility. The timing is particularly telling: the deal comes just as OpenAI faces increasing competition from both well-funded startups and tech giants. Having multiple cloud providers makes it far less likely that OpenAI will hit capacity constraints during critical product launches or research breakthroughs. This multi-cloud approach will likely become standard for AI companies at scale, as being locked into a single provider creates both business and technical risks.

The Staggering Economics of AI Compute

The $38 billion figure over seven years reveals the astronomical costs required to compete at the frontier of AI development. Spread evenly across the term, this translates to approximately $5.4 billion annually just for cloud infrastructure, a figure that dwarfs the entire R&D budgets of most technology companies. What’s particularly revealing is that this represents infrastructure costs alone, not including the massive research teams, data acquisition, and operational expenses required to build and maintain advanced AI systems. The fact that OpenAI can commit to this level of spending indicates that it anticipates either massive revenue growth from enterprise customers or continued substantial investment from backers who see this as a long-term strategic play rather than a path to immediate profitability.
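The annual figure above is simple division, and a short sketch makes the assumption behind it explicit. The only inputs taken from the reporting are the $38 billion total and the seven-year term; the even spending schedule is an assumption for illustration, since no payment schedule is given, and the monthly and daily breakdowns are derived from it.

```python
# Back-of-the-envelope breakdown of the reported commitment.
# Assumption: spending is spread evenly over the term. The reporting gives
# only the total and the duration, not an actual payment schedule.
TOTAL_COMMITMENT_USD = 38e9  # reported total commitment
TERM_YEARS = 7               # reported deal length

annual_usd = TOTAL_COMMITMENT_USD / TERM_YEARS
monthly_usd = annual_usd / 12
daily_usd = annual_usd / 365

print(f"Per year:  ${annual_usd / 1e9:.2f} billion")
print(f"Per month: ${monthly_usd / 1e6:.0f} million")
print(f"Per day:   ${daily_usd / 1e6:.1f} million")
```

In practice, spending under a deal like this would probably ramp up as capacity comes online rather than stay flat, so the even split is best read as an average rather than a forecast for any given year.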

Accelerating the AI Infrastructure Arms Race

This deal will force immediate reactions across the cloud and AI ecosystems. Microsoft will likely respond with even more aggressive pricing and capacity guarantees for its AI partners, while Google Cloud will need to demonstrate why its TPU infrastructure provides competitive advantages. For Nvidia, this represents another massive validation of its hardware dominance, but it also increases pressure on alternative chip providers like AMD and Intel, and on the cloud providers’ own custom silicon, to prove they can handle workloads at this scale. Smaller AI companies will face even steeper barriers to entry, as competing requires either similar infrastructure budgets or revolutionary efficiency breakthroughs that reduce compute requirements.

What This Scale Enables

The technical specifications mentioned (hundreds of thousands of GPUs with low-latency interconnects) suggest OpenAI is building infrastructure capable of training models significantly larger than GPT-4. The focus on clustering GB200 and GB300 GPUs indicates the company is planning for both current-generation and next-generation Nvidia architectures, so that it can scale efficiently as new hardware becomes available. This level of investment likely anticipates models that require continuous training rather than periodic updates, real-time multimodal processing at unprecedented scale, and potentially AI systems that can run multiple complex reasoning chains simultaneously. The 2026 deployment deadline suggests we’ll see the fruits of this infrastructure in the next major model generation.

The Coming Infrastructure Consolidation

Looking forward, this deal accelerates the consolidation of advanced AI capabilities into companies that can afford this level of infrastructure investment. We’re likely to see a bifurcated market where a handful of well-funded companies develop frontier models while everyone else builds on top of their APIs or focuses on specialized vertical applications. The $38 billion commitment also sets a new benchmark for what it costs to compete at the highest level of AI development, which will influence investment decisions across the venture capital landscape. The partnership announcement’s emphasis on scale and security makes clear that reliability and capacity have become as important as raw performance in the AI infrastructure equation.