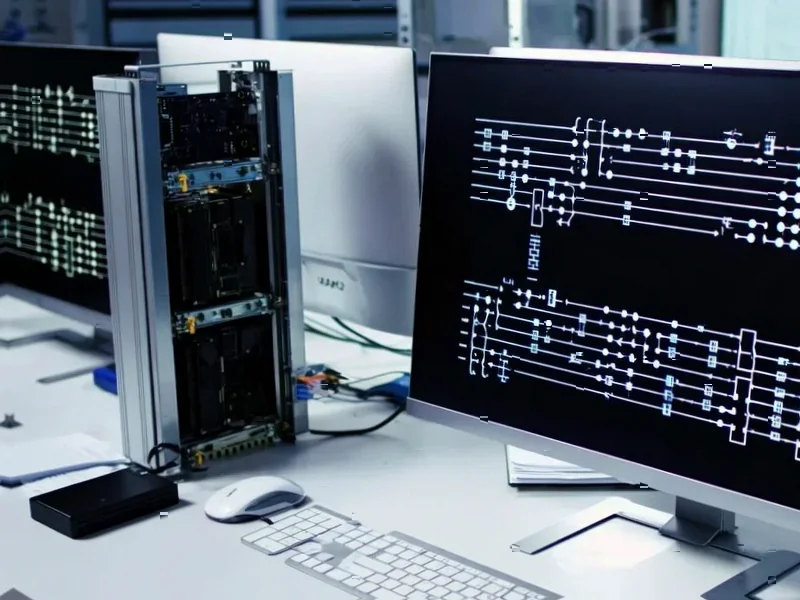

According to Guru3D.com, OpenAI is partnering with Amazon Web Services in a $38 billion deal to expand its AI computing capacity through AWS infrastructure rather than building new data centers. The partnership will use Nvidia’s GB200 and GB300 hardware, with the GB300 configuration reportedly capable of running up to 72 Blackwell GPUs that deliver approximately 360 petaflops of performance and 13.4 terabytes of HBM3e memory. OpenAI anticipates deploying “hundreds of thousands of GPUs,” with potential scaling to “tens of millions of CPUs.” The rollout is expected to complete by the end of 2026 and could extend into 2027. This strategic move, as detailed in OpenAI’s partnership announcement, represents a significant shift in how the AI leader approaches infrastructure scaling.

The Strategic Pivot Away from Vertical Integration

This deal signals a fundamental rethinking of infrastructure strategy among AI leaders. Rather than pursuing the traditional tech giant path of building proprietary data centers—the approach that made Google and Microsoft infrastructure powerhouses—OpenAI is betting that cloud flexibility outweighs the long-term cost benefits of ownership. This reflects a calculation that the AI hardware landscape is evolving too rapidly to lock into fixed infrastructure investments. The Blackwell architecture OpenAI is deploying represents just one generation in Nvidia’s accelerating roadmap, and committing to owned data centers would risk technological obsolescence within years rather than decades.

GPU Economics and the Subscription Model

The $38 billion figure, while staggering, may represent a more efficient capital allocation strategy. Building equivalent GPU capacity through owned infrastructure would require similar upfront investment plus ongoing maintenance, staffing, and refresh cycles. By shifting this to an operational expense model, OpenAI preserves capital for model research and product development while gaining immediate access to cutting-edge hardware. More importantly, this subscription approach allows for dynamic scaling: OpenAI can ramp GPU usage during intensive training phases and scale back during inference-heavy periods, whereas owned infrastructure sized for peak demand sits partly idle the rest of the time.
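The own-versus-rent reasoning above comes down to a break-even utilization: owned hardware costs roughly the same whether or not it is busy, while rented capacity is paid for only when used. The Python sketch below illustrates that arithmetic. Every number in it is a hypothetical placeholder chosen for illustration, not a figure from the deal or a real market price, and the function names are invented for this example.

```python
HOURS_PER_YEAR = 8760


def owned_annual_cost(capex, lifetime_years, annual_opex):
    """Yearly cost of an owned GPU: straight-line depreciation plus running costs."""
    return capex / lifetime_years + annual_opex


def rented_annual_cost(hourly_rate, utilization):
    """Yearly cost of a rented GPU that is paid for only while in use.

    utilization is the fraction of the year the GPU is actually needed (0.0 to 1.0).
    """
    return hourly_rate * HOURS_PER_YEAR * utilization


def break_even_utilization(capex, lifetime_years, annual_opex, hourly_rate):
    """Utilization above which owning becomes cheaper than renting."""
    owned = owned_annual_cost(capex, lifetime_years, annual_opex)
    return owned / (hourly_rate * HOURS_PER_YEAR)


# Hypothetical per-GPU inputs (assumptions, not real prices).
CAPEX = 40_000.0        # purchase price per GPU, USD
LIFETIME_YEARS = 4      # years before a hardware refresh
ANNUAL_OPEX = 5_000.0   # power, cooling, staffing per GPU per year, USD
HOURLY_RATE = 3.0       # cloud rental price per GPU-hour, USD

threshold = break_even_utilization(CAPEX, LIFETIME_YEARS, ANNUAL_OPEX, HOURLY_RATE)
print(f"Owning is cheaper only above {threshold:.0%} utilization")
```

Under these placeholder inputs, a workload that keeps its GPUs busy less often than the threshold is cheaper to rent, which is the case the article makes for bursty training demand. The sketch ignores committed-spend contracts, volume discounts, and financing costs, all of which would shift the result.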

AWS’s Strategic Positioning in the AI Cloud Wars

For Amazon, this partnership represents a masterstroke in cloud competition dynamics. While Microsoft secured an early advantage through its exclusive OpenAI partnership, AWS has now positioned itself as the infrastructure backbone for OpenAI’s scaling ambitions. This creates a fascinating triangular relationship in which OpenAI maintains strategic independence by working with multiple cloud providers. The decision to use Nvidia hardware rather than Amazon’s proprietary Trainium2 chips is particularly telling: it suggests that even cloud giants recognize that Nvidia’s ecosystem dominance remains unchallenged for cutting-edge model development, despite their substantial investments in custom silicon.

The Coming Industry Consolidation

This deal will accelerate consolidation in the AI infrastructure market, creating an even higher barrier to entry for newcomers. When industry leaders commit tens of billions to cloud GPU capacity, it signals that the era of garage-scale AI innovation is ending. We’re entering a phase where only well-capitalized organizations can compete at the frontier model level. This will likely push smaller AI companies toward specialization in vertical applications or model fine-tuning rather than foundation model development. The cloud providers themselves will increasingly become the gatekeepers of AI capability, controlling access to the hardware required for state-of-the-art model training.

The 2027 Horizon and Beyond

Looking toward the 2026-2027 deployment timeline, this infrastructure will likely support AI models of unprecedented scale and complexity. The memory capacity alone, 13.4 terabytes per 72-GPU configuration, suggests models far larger than today’s GPT-4 class systems once many such configurations are linked together. More importantly, the low-latency interconnect architecture indicates a shift toward truly massive model parallelism, enabling training runs that current infrastructure simply cannot support. By the time this capacity comes fully online, we may see the first trillion-parameter-plus models capable of reasoning across modalities with human-like coherence. The race isn’t just about bigger models. It’s about infrastructure that can make those models economically viable at global scale.
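To put the 13.4-terabyte figure in context, the following Python sketch gives a rough estimate of how many model parameters fit in that much memory. It uses the 72-GPU and 13.4 TB figures quoted in the article. The bytes-per-parameter values are standard numeric widths, and the 16 bytes per parameter used for training is a common rule of thumb for mixed-precision training with the Adam optimizer, taken here as an assumption. Activations, KV caches, and framework overhead are ignored, so real capacity would be lower.

```python
TOTAL_HBM_TB = 13.4   # HBM3e per 72-GPU configuration, as quoted in the article
GPUS = 72             # GPUs per configuration, as quoted in the article


def per_gpu_memory_gb(total_tb=TOTAL_HBM_TB, gpus=GPUS):
    """Average HBM per GPU in gigabytes (decimal units, 1 TB = 1000 GB)."""
    return total_tb * 1000 / gpus


def max_params_trillions(bytes_per_param, total_tb=TOTAL_HBM_TB):
    """Upper bound on parameter count, in trillions.

    1 TB is 1e12 bytes, so terabytes divided by bytes-per-parameter
    gives trillions of parameters directly.
    """
    return total_tb / bytes_per_param


# Inference: weights only, at common storage precisions.
WEIGHT_BYTES = {"bf16": 2, "fp8": 1, "fp4": 0.5}

# Training: assumed rule of thumb for mixed-precision Adam
# (weights, gradients, and optimizer state together).
TRAINING_BYTES_PER_PARAM = 16

print(f"Memory per GPU: {per_gpu_memory_gb():.0f} GB")
for name, width in WEIGHT_BYTES.items():
    print(f"{name} weights only: up to {max_params_trillions(width):.1f}T parameters")
print(f"Training state: up to {max_params_trillions(TRAINING_BYTES_PER_PARAM):.2f}T parameters")
```

On this estimate, a single 72-GPU configuration can hold the weights of a multi-trillion-parameter model for inference, while training a model of that size would need memory pooled across many configurations. That is why the interconnect matters as much as raw capacity.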