The Unseen Crisis in AI Adoption

While society grapples with the rapid proliferation of artificial intelligence, we’re witnessing what may become one of history’s most consequential regulatory failures. The parallel to automotive regulation is striking: U.S. states came to require that drivers demonstrate competence before operating powerful machinery, and in 1966 the National Traffic and Motor Vehicle Safety Act added federal safety standards for the vehicles themselves. Today, as AI systems penetrate every aspect of our lives at unprecedented speed, we face a similar inflection point with far greater stakes.

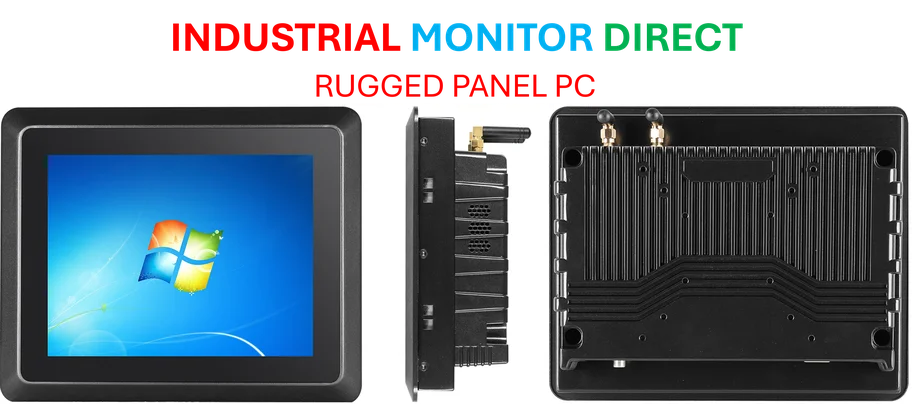

Unlike automobiles, which required physical infrastructure and visible operation, AI has silently integrated into billions of devices with minimal consumer cost. The consequences of this unregulated expansion are already manifesting: democratic discourse polluted by synthetic content, educational systems undermined by undetectable plagiarism, and vulnerable populations exploited by algorithmic discrimination. The time for digital driver’s licenses (DDL) for AI access may have arrived.

The Double Literacy Imperative

A meaningful DDL framework must rest on what experts call “double literacy”—two interdependent forms of competency that together create responsible AI users.

Human Literacy encompasses the holistic understanding of how humans think, feel, communicate and organize, including the complex interplay between self and society. Without this foundation, AI users become what critics call “sophisticated parrots”: technically capable of using tools but fundamentally unable to evaluate their implications. Over-reliance on AI then leaves society highly susceptible to misinformation and propaganda, and nuanced discourse fades as a result.

Algorithmic Literacy involves comprehending how AI systems function and fail, and how they impact human autonomy. Recent studies reveal alarming knowledge gaps—almost half of Gen Z scored poorly on evaluating critical AI shortcomings, such as whether systems can fabricate facts. This knowledge gap transforms powerful assets into engines of potential harm.

These literacies cannot exist in isolation. One without the other creates either technicians without wisdom or philosophers without capability—both dangerous in the AI age.

Individual Consequences of Unguarded AI Access

At the individual level, unregulated AI access creates predictable pathologies. According to the National Center for Education Statistics, the percentage of U.S. adults ages 16 to 65 in the lowest literacy level has increased from 19% in 2017 to 28% in 2023. When functionally illiterate individuals gain access to generative AI, they lack the foundation to evaluate outputs critically.

A DDL system would require demonstrated competence before access, similar to how driving tests assess both mechanical skill and judgment. That competence includes understanding AI limitations, recognizing bias patterns, and identifying potential misuse scenarios. Without certification, individuals can weaponize AI inadvertently, spreading misinformation or automating their own biases at scale.

Organizational Vulnerabilities and Institutional Responsibility

Organizations deploying AI without certified users create institutional vulnerabilities that extend far beyond operational inefficiencies. Article 4 of the EU AI Act requires organizations—both those building AI systems and those deploying them—to ensure everyone involved understands how AI works, including its risks and impacts.

A DDL framework at the organizational level would require role-specific certification, ethical deployment protocols, and accountability structures. Yet today, barely one in five HR leaders plans to develop AI literacy programs despite AI’s growing role in hiring, promotion and termination decisions. This creates not only ethical exposure but operational fragility that can undermine entire enterprises.

Societal and Global Implications

At the societal level, ungated AI access threatens democratic institutions and social cohesion. Across 31 countries, one in three adults is more worried than excited about living and working amid AI. A DDL system would create common understanding, establish accountability standards, and prevent the fragmentation between AI-competent elites and increasingly marginalized populations.

The World Economic Forum’s Future of Jobs Report 2025 projects that nearly 40% of skills required by the global workforce will change within five years. Without certification systems, we risk creating a digital divide that compounds existing inequalities, with marginalized communities bearing the brunt of this disruption.

At the global level, the DDL question forces confrontation with deeper questions about human agency and technological determinism. Previous technological revolutions occurred gradually enough for cultural adaptation; AI’s unprecedented pace forecloses this option. The current transition represents humanity’s first experiment in democratizing superhuman cognitive capabilities—without corresponding discernment requirements, it becomes a societal time bomb.

Implementation Pathways and Personal Responsibility

The global regulatory landscape provides templates for DDL implementation. The EU AI Act regulates systems based on risk tiers, banning certain uses and imposing strict controls on high-risk applications. The OECD AI Principles, which the G20 drew on for its own AI principles, have significantly influenced these regulatory efforts.

Implementation would mirror existing driver’s licensing systems with tiered certification levels, practical competency assessments, and renewal requirements reflecting technological evolution. These would be framed by enforcement mechanisms with penalties for unlicensed use and liability frameworks for certified misconduct.
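To make the tiered structure concrete, here is a minimal sketch of how a licence record with tiers and renewal might be modeled. Everything in it is an assumption made for illustration: the tier names, the renewal periods, and the `License` class are hypothetical and do not come from any existing regulation or system.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical certification tiers, ordered from least to most privileged."""
    BASIC = 1
    PROFESSIONAL = 2
    HIGH_RISK = 3


# Assumed renewal periods; real values would be a policy decision.
# Higher tiers renew more often to track technological change.
RENEWAL_PERIOD = {
    Tier.BASIC: timedelta(days=3 * 365),
    Tier.PROFESSIONAL: timedelta(days=2 * 365),
    Tier.HIGH_RISK: timedelta(days=365),
}


@dataclass(frozen=True)
class License:
    holder: str
    tier: Tier
    issued: date

    def expires(self) -> date:
        """Date after which the licence must be renewed."""
        return self.issued + RENEWAL_PERIOD[self.tier]

    def permits(self, required: Tier, today: date) -> bool:
        """True if the licence is unexpired and its tier covers the required one."""
        return today <= self.expires() and self.tier >= required
```

Under these assumptions, a holder certified at the professional tier could use basic-tier systems until renewal falls due, but would be refused access to anything classed as high-risk.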

However, we need not wait for formal systems to behave responsibly. Individuals can:

- Audit current AI interactions: List every AI system you have used recently and assess how well you understand its workings and limitations

- Identify literacy gaps: Determine whether technical proficiency or ethical understanding needs development

- Create personal DDLs: Commit to lifelong double literacy development with specific competency goals

- Advocate for certification: Champion gatekeeping initiatives within spheres of influence

The Urgency of Action

The driver’s license didn’t emerge from philosophical debates about freedom—it emerged from carnage on highways. AI’s highway is cognitive space, and the accidents are already accumulating. The DDL isn’t a restriction on freedom but a recognition that certain freedoms require demonstrated competence.

We stand at a pivotal moment where proactive regulation could prevent the cognitive equivalent of highway fatalities. The question isn’t whether AI affects you, because it already does. The question is whether you, your organization, and your society understand it well enough to navigate what comes next.

The time for digital driver’s licenses may be closer than we think, and the cost of waiting may be greater than we imagine.
