According to Phys.org, philosophy experts argue that although AI can mimic human decision-making, it cannot truly make moral choices or be held accountable as a "moral agent." Dr. Martin Peterson argues that responsibility for AI actions lies with developers and users rather than with the technology itself. Dr. Glen Miller emphasizes that AI lacks human practical judgment, even though it operates within complex sociotechnical systems. This view raises pressing questions about how AI should be developed and regulated.

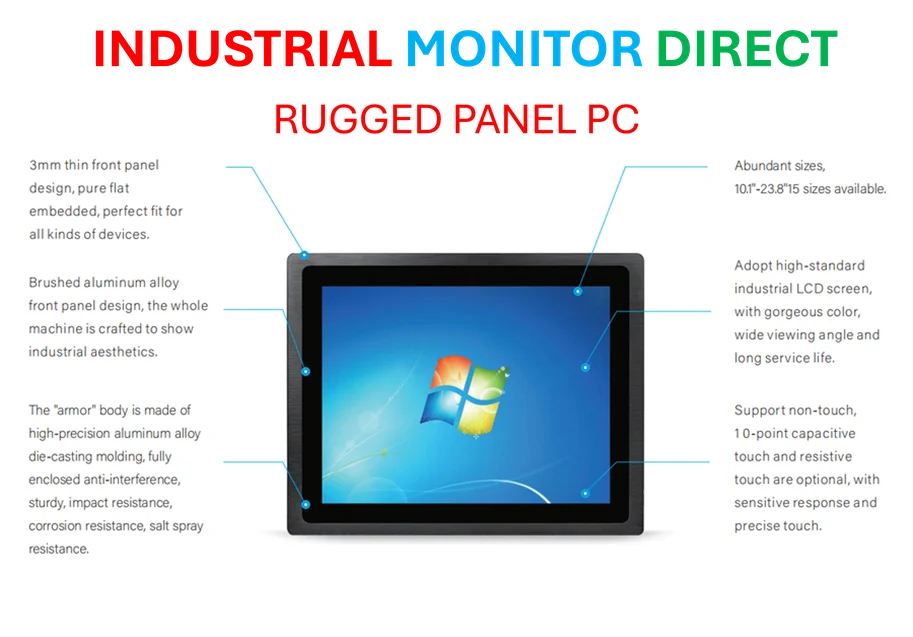




Understanding the Philosophical Framework

The debate about artificial intelligence and morality draws on centuries of philosophical discussion about what constitutes moral agency. Traditional philosophy distinguishes between entities that can make genuine moral choices and those that merely follow programmed instructions. On this view, moral agency requires more than the ability to follow rules: it requires consciousness, intentionality, and an understanding of ethical principles, capacities that current AI systems lack. This is not merely a technical limitation; it reflects deeper questions about the nature of mind and responsibility that philosophers have debated since Aristotle.

Critical Analysis of Value Alignment Challenges

The core challenge in building ethical AI systems is what philosophers and AI researchers call the "value alignment problem": translating complex human values into computational frameworks. Even with advanced machine learning, AI systems cannot grasp the context-sensitive judgment that human decision-making involves. Efforts to build the "scorecard" Peterson proposes for measuring value alignment face fundamental obstacles. Different cultures and individuals prioritize values differently, and many ethical dilemmas involve trade-offs between competing goods that resist quantification. Moreover, reducing ethics to measurable metrics risks oversimplifying moral complexity.

Industry Implications and Regulatory Challenges

The philosophical limits of AI morality have significant implications for every industry that deploys autonomous systems. In healthcare, where AI assists with diagnostics and treatment recommendations, the absence of genuine moral understanding means human oversight remains essential for life-and-death decisions. In autonomous vehicles, the inability to make genuine moral judgments complicates how engineers program responses to ethical dilemmas such as the classic "trolley problem." Financial services firms using AI for lending decisions struggle to ensure fairness when their systems cannot comprehend the moral dimensions of their recommendations. These limitations suggest that regulatory frameworks should focus on developer accountability and system transparency rather than treating AI systems as independent moral agents.

Future Outlook and Development Trajectory

Looking ahead, these philosophical constraints suggest that the most productive path is to develop AI as sophisticated tools that augment, rather than replace, human moral judgment. The coming decade will likely bring greater emphasis on interpretable AI systems whose reasoning can be examined and challenged by human operators. Rather than pursuing artificial general intelligence with human-like moral capacities, the industry may have more success building specialized systems with clearly defined ethical boundaries and robust oversight mechanisms. The ultimate test will not be whether AI can mimic human morality, but whether we can build systems that strengthen our collective ability to make ethical decisions while respecting those systems' inherent limitations.