The artificial intelligence landscape is witnessing a fundamental shift as AI agents emerge as the next frontier in generative AI, moving beyond simple chat interfaces to systems that can actively perform tasks and make decisions. While the concept has generated considerable excitement, understanding the actual technical architecture behind these systems reveals both their capabilities and limitations.

As industrial operations increasingly adopt autonomous systems, the distinction between basic AI models and true agentic systems becomes crucial for manufacturing and production environments where reliability and precision are paramount.

The Core Architecture of AI Agent Systems

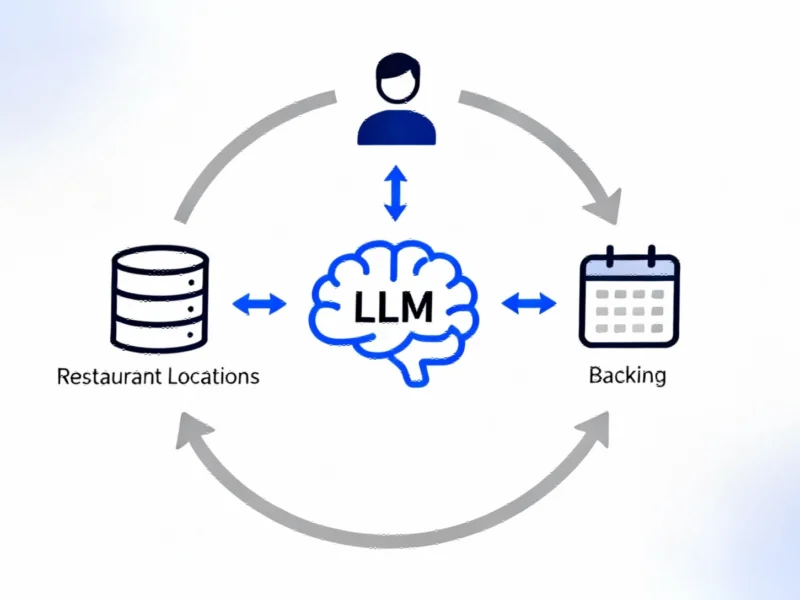

At its simplest, an AI agent is a large language model (LLM) that operates tools in a loop to accomplish a specific objective. Unlike a model that merely responds to prompts, an agent takes initiative: it analyzes the situation, selects an appropriate tool, executes an action, and uses the observed outcome to decide its next step. This autonomy is a significant advance in how artificial intelligence interacts with digital environments and physical systems.

The transition from conversational AI to action-oriented agents mirrors broader technological trends, including the evolution of always-on computing systems that continuously monitor and respond to environmental changes. However, where listening systems primarily gather data, AI agents process that information and take meaningful action.

Development Frameworks: Building Blocks for Autonomous Systems

Creating effective AI agents requires development frameworks that support the thought-action-observation cycle known as ReAct (Reasoning + Acting). In this model, the agent first reasons about how to approach a task, then executes an action through an available tool, and finally observes the result to inform its next decision.
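
The thought-action-observation cycle can be sketched in a few lines of Python. This is a minimal illustration, not any particular framework's API: `scripted_policy` is a hypothetical stand-in for the LLM, and the `add` tool and `run_agent` function are invented for the example.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str, str]  # (thought, action, observation)

# Hypothetical tool registry: tool name -> function taking one string argument.
TOOLS: Dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split("+"))),
}


def scripted_policy(goal: str, history: List[Step]) -> Tuple[str, str, str]:
    """Stand-in for the LLM: returns (thought, action, argument)."""
    if not history:
        return ("I need to compute the sum.", "add", goal)
    last_observation = history[-1][2]
    return ("I have the result.", "finish", last_observation)


def run_agent(goal: str, policy, tools, max_steps: int = 5) -> str:
    """Run the reason -> act -> observe loop until the policy finishes."""
    history: List[Step] = []
    for _ in range(max_steps):
        thought, action, arg = policy(goal, history)      # reason
        if action == "finish":
            return arg
        observation = tools[action](arg)                  # act
        history.append((thought, action, observation))    # observe
    raise RuntimeError("step budget exhausted before the agent finished")
```

Calling `run_agent("2+3", scripted_policy, TOOLS)` walks through one tool call and then finishes. The `max_steps` budget is a design choice for the sketch: a loop driven by a model needs some bound so a confused policy cannot run forever.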

Modern agent frameworks let developers define objectives in natural language while specifying the tools and resources the agent can use. These tools range from databases and APIs to custom microservices, each with a clearly defined purpose and execution parameters. Some frameworks even let agents generate their own tools when necessary, for example by writing Python code for data processing tasks that would be inefficient to run through the LLM directly.
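
As a rough illustration of a tool with a defined purpose and execution parameters, the sketch below pairs a natural-language description with a simple parameter check. The `Tool` class, its fields, and the `celsius_to_fahrenheit` example are assumptions made for this example and do not correspond to a specific framework.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Tool:
    name: str
    description: str                # shown to the model so it can choose a tool
    parameters: Dict[str, type]     # parameter name -> expected Python type
    handler: Callable[..., Any]

    def call(self, **kwargs: Any) -> Any:
        """Validate arguments against the declared parameters, then run."""
        missing = set(self.parameters) - set(kwargs)
        extra = set(kwargs) - set(self.parameters)
        if missing or extra:
            raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")
        for key, expected in self.parameters.items():
            if not isinstance(kwargs[key], expected):
                raise TypeError(f"{key} must be {expected.__name__}")
        return self.handler(**kwargs)


def describe(tools: List[Tool]) -> str:
    """Render the tool list as text that could be placed in a prompt."""
    return "\n".join(f"- {t.name}: {t.description}" for t in tools)


# Hypothetical example tool.
celsius_to_fahrenheit = Tool(
    name="celsius_to_fahrenheit",
    description="Convert a temperature from Celsius to Fahrenheit.",
    parameters={"celsius": float},
    handler=lambda celsius: celsius * 9 / 5 + 32,
)
```

Validating arguments before execution matters here because the arguments come from model output rather than from hand-written calling code.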

Runtime Environment: Secure Execution Infrastructure

Running AI agents demands robust, secure infrastructure. Unlike simpler applications, agents need a persistently deployed LLM alongside memory resources to track inputs, outputs, and intermediate states. One approach is session-based isolation using micro-virtual machines (microVMs), which give each session a secure execution environment with dedicated compute, memory, and file system resources.

This approach to resource allocation reflects the growing demand for specialized computing infrastructure, similar to how former industrial sites are being repurposed for data center development to support increasingly complex computational workloads. When an agent session concludes, the system preserves essential state information in long-term memory while destroying the temporary execution environment, balancing performance with security.
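
The session lifecycle described above (create an isolated workspace, run, persist essential state, destroy the environment) can be illustrated with a context manager. This sketch uses a temporary directory purely as a stand-in: it is not a microVM and provides no security isolation. `agent_session`, `Session`, and `LONG_TERM_STORE` are hypothetical names.

```python
import tempfile
from contextlib import contextmanager
from pathlib import Path
from typing import Dict, Iterator, List

# Hypothetical long-term store that outlives individual sessions.
LONG_TERM_STORE: Dict[str, List[str]] = {}


class Session:
    def __init__(self, session_id: str, workspace: Path) -> None:
        self.session_id = session_id
        self.workspace = workspace      # scratch space, destroyed at session end
        self.notes: List[str] = []      # state worth keeping after the session


@contextmanager
def agent_session(session_id: str) -> Iterator[Session]:
    """Create a per-session workspace, persist notes on exit, then delete it."""
    with tempfile.TemporaryDirectory(prefix=f"agent-{session_id}-") as tmp:
        session = Session(session_id, Path(tmp))
        try:
            yield session
        finally:
            # Preserve essential state before the workspace disappears.
            LONG_TERM_STORE.setdefault(session_id, []).extend(session.notes)
    # Leaving the inner `with` block removes the temporary directory.
```

A caller would write scratch files under `session.workspace` and append anything worth keeping to `session.notes`; only the notes survive once the `with agent_session(...)` block exits.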

Tool Integration and Communication Protocols

Effective tool integration represents one of the most critical aspects of agent architecture. Standards like the Model Context Protocol (MCP) establish dedicated communication channels between the LLM and tool execution servers, handling the translation between natural language reasoning and structured API calls.
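
To make the translation step concrete, the sketch below builds and handles a tool invocation in the JSON-RPC 2.0 message shape that MCP uses for `tools/call`. It is a simplified, in-process illustration rather than a real MCP client or server: there is no transport, initialization, or capability negotiation, the error handling uses generic JSON-RPC codes, and the `read_sensor` tool name in the usage note is hypothetical.

```python
import json
from typing import Any, Callable, Dict


def build_tool_call(request_id: int, name: str, arguments: Dict[str, Any]) -> str:
    """Serialize a tool invocation as a JSON-RPC 2.0 request."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def handle_request(raw: str, tools: Dict[str, Callable[..., Any]]) -> str:
    """Dispatch a request to a registered tool and wrap the result as text."""
    request = json.loads(raw)
    reply: Dict[str, Any] = {"jsonrpc": "2.0", "id": request.get("id")}
    if request.get("method") != "tools/call":
        reply["error"] = {"code": -32601, "message": "Method not found"}
        return json.dumps(reply)
    params = request.get("params", {})
    handler = tools.get(params.get("name"))
    if handler is None:
        reply["error"] = {"code": -32602, "message": "Unknown tool"}
        return json.dumps(reply)
    text = handler(**params.get("arguments", {}))
    reply["result"] = {
        "content": [{"type": "text", "text": str(text)}],
        "isError": False,
    }
    return json.dumps(reply)
```

For example, registering `{"read_sensor": lambda sensor_id: f"{sensor_id}=21.5"}` and passing `build_tool_call(1, "read_sensor", {"sensor_id": "t1"})` to `handle_request` yields a reply whose `result.content` carries the tool output as text, which the model can then read as an observation.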

In scenarios where traditional APIs are unavailable, agents can leverage computer use services that simulate human interactions—moving cursors, clicking buttons, and navigating interfaces. This capability is particularly valuable in industrial settings where legacy systems lack modern integration points but still require automation.

Authorization and Security Considerations

Agent systems implement authorization in two directions: users must be authorized to deploy agents, and the agents themselves need permission to access protected resources on the user’s behalf. Modern implementations typically employ delegation protocols like OAuth, which let an agent access services for a user without directly handling that user’s credentials.

This security model becomes increasingly important as agents interact with critical systems, where unauthorized access could have significant consequences. The approach mirrors security considerations in other complex computing environments, including situations where system updates can unexpectedly disrupt established workflows and require careful permission management.
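
The core idea of delegated access, that the agent holds a scoped, expiring token rather than the user's password, can be sketched as follows. This is not an OAuth implementation: `DelegatedToken`, the scope strings, and `read_work_orders` are hypothetical, and a real deployment would obtain and validate tokens through an authorization server.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class DelegatedToken:
    subject: str              # the user on whose behalf the agent acts
    scopes: FrozenSet[str]    # what the user agreed to delegate
    expires_at: float         # Unix timestamp after which the token is invalid


def is_allowed(token: DelegatedToken, required_scope: str, now: float) -> bool:
    """A request is allowed only if the token is unexpired and carries the scope."""
    return now < token.expires_at and required_scope in token.scopes


def read_work_orders(token: DelegatedToken, now: float) -> List[str]:
    """Hypothetical protected resource that checks the token before answering."""
    if not is_allowed(token, "workorders:read", now):
        raise PermissionError("token lacks workorders:read or has expired")
    return [f"work orders visible to {token.subject}"]
```

Passing `now` explicitly keeps the check deterministic for testing. Because the token names specific scopes, an agent granted `workorders:read` cannot write work orders even though it acts for a user who can.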

Memory Systems: From Immediate Context to Persistent Knowledge

AI agents employ sophisticated memory architectures that operate across multiple timescales:

- Short-term memory handles immediate task context, storing intermediate results and tool outputs that would overwhelm the LLM’s context window if processed simultaneously

- Long-term memory preserves distilled knowledge across sessions through summarization, embedding, and chunking techniques that enable efficient retrieval of relevant information

This hierarchical memory approach allows agents to maintain continuity while avoiding the computational overhead of processing entire interaction histories with each decision. The system extracts essential information at session conclusion, converting immediate context into persistent knowledge that informs future interactions.
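
A toy version of this two-tier memory is sketched below. It makes several simplifying assumptions: joining strings stands in for LLM summarization, word overlap stands in for embedding similarity, and the `AgentMemory` class and its method names are invented for the example.

```python
from collections import deque
from typing import Deque, List


class AgentMemory:
    def __init__(self, short_term_limit: int = 3) -> None:
        # Bounded buffer: the oldest items fall out when the limit is reached.
        self.short_term: Deque[str] = deque(maxlen=short_term_limit)
        self.long_term: List[str] = []

    def observe(self, item: str) -> None:
        """Record an intermediate result or tool output for the current task."""
        self.short_term.append(item)

    def end_session(self) -> None:
        """Distill the session into one long-term chunk, then clear the buffer."""
        if self.short_term:
            summary = " | ".join(self.short_term)  # stand-in for summarization
            self.long_term.append(summary)
            self.short_term.clear()

    def recall(self, query: str, top_k: int = 1) -> List[str]:
        """Return the stored chunks sharing the most words with the query."""
        query_words = set(query.lower().split())
        ranked = sorted(
            self.long_term,
            key=lambda chunk: len(query_words & set(chunk.lower().split())),
            reverse=True,
        )
        return ranked[:top_k]
```

The bounded `deque` reflects the point about context limits: only recent items are kept in working memory, while `end_session` converts what remains into a compact record that later sessions can retrieve.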

Performance Monitoring and Evaluation

Comprehensive tracing mechanisms record the complete sequence of API calls, tool interactions, and LLM inputs/outputs, enabling detailed performance analysis. This audit trail serves multiple purposes: identifying optimization opportunities, troubleshooting failures, and ensuring the agent’s decision-making process aligns with expected behaviors.

The transparency provided by execution traces is particularly valuable in industrial applications where understanding failure modes and performance bottlenecks can inform both immediate improvements and long-term architectural decisions.
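
One lightweight way to capture such a trace is a decorator that records every call's inputs, output, error, and duration. The sketch below is an assumption-laden illustration, not a specific tracing product: `traced`, `TRACE`, and the `lookup_part` tool are hypothetical, and a production system would write events to durable storage rather than a list in memory.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# In-memory trace log; each entry describes one call.
TRACE: List[Dict[str, Any]] = []


def traced(kind: str) -> Callable:
    """Decorator factory that logs calls of a given kind (e.g. 'tool', 'llm')."""
    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            event: Dict[str, Any] = {
                "kind": kind,
                "name": func.__name__,
                "args": args,
                "kwargs": kwargs,
            }
            try:
                event["output"] = func(*args, **kwargs)
                return event["output"]
            except Exception as exc:
                event["error"] = repr(exc)   # keep failures in the audit trail
                raise
            finally:
                event["duration_s"] = time.perf_counter() - start
                TRACE.append(event)
        return wrapper
    return decorator


@traced("tool")
def lookup_part(part_id: str) -> str:
    """Hypothetical tool used to demonstrate tracing."""
    return f"part {part_id}: in stock"
```

Because failed calls are logged before the exception propagates, the trace shows where a run went wrong as well as which steps were slow.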

The Future of Agentic Systems in Industrial Applications

As AI agent technology matures, its application in manufacturing, logistics, and production environments will likely expand beyond current implementations. The architecture supporting these systems—combining reasoning capabilities with tool integration, secure execution, and persistent memory—provides a foundation for increasingly sophisticated autonomous operations.

The transition from conversational AI to action-oriented agents represents more than just a technical evolution—it signals a fundamental shift in how artificial intelligence integrates with human workflows and industrial processes. As these systems become more robust and reliable, they promise to transform not just how we interact with computers, but how entire industries approach automation and decision-making.

Based on reporting by VentureBeat. This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.