New Parental Controls for AI Interactions

Meta Platforms is reportedly developing enhanced parental controls that will allow guardians to restrict their teenagers’ interactions with AI chatbots across Instagram and Facebook. According to reports, the new features will enable parents to either completely disable access to AI chatbots or selectively block specific AI characters their children might encounter.

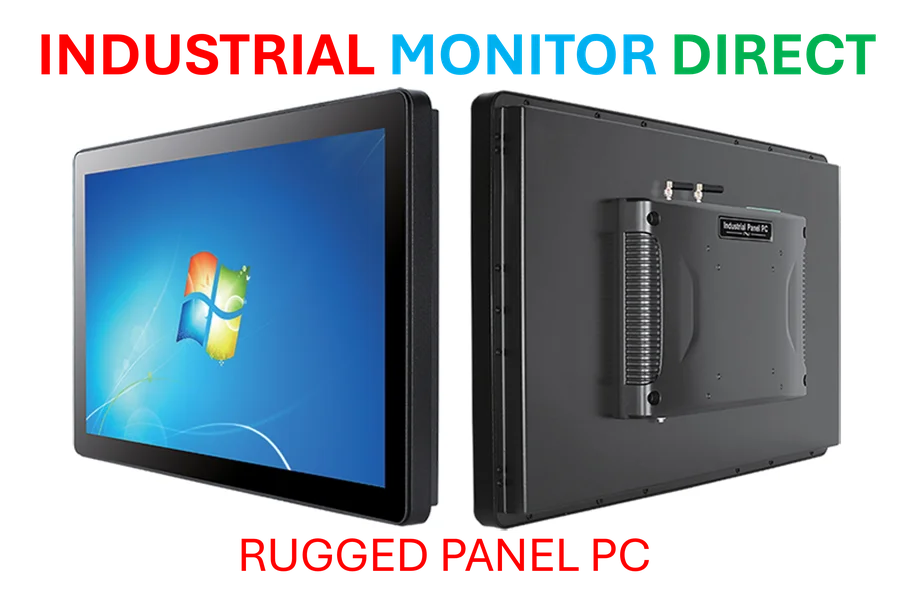

The update expands existing safeguards for “teen accounts,” which are default settings for users under 18. Sources indicate these controls represent Meta’s response to growing concerns about the blurred boundaries between automated assistants and digital companions as artificial intelligence systems become increasingly sophisticated.

Enhanced Monitoring and Safety Features

Beyond blocking capabilities, Meta plans to provide parents with what it describes as “insights” – data about the topics and themes their children discuss with AI companions. The company says this feature is designed to help parents have better-informed conversations with their teens about online and AI safety, according to its official safety approach announcement.

Meta executives said the changes reflect an effort to support parents as their children interact with evolving digital technologies. “We recognize parents already have a lot on their plates when it comes to navigating the internet safely with their teens, and we’re committed to providing them with helpful tools and resources that make things simpler for them, especially as they think about new technology like AI,” wrote Instagram head Adam Mosseri and Meta’s chief AI officer Alexandr Wang.

Content Restrictions and Rating Systems

The strengthened safeguards follow high-profile reports documenting failures of AI systems to protect minors from inappropriate content. Analysts suggest these measures come amid growing scrutiny around the safety of generative AI systems, particularly those targeting or accessible to minors.

Earlier this week, Instagram announced plans to introduce a parental guidance system modeled on the PG-13 movie rating standard. This step gives parents broader authority over what kind of content their children encounter and complements restrictions on the types of conversations AI chatbots are permitted to have with teen users.

The report states that chatbots on Instagram will be prevented from engaging in discussions that reference self-harm, suicide, or disordered eating, and will only discuss topics considered age-appropriate, such as academics and sports. Conversations about romance or sexually explicit subjects will be barred entirely.

Background and Previous Incidents

The enhanced safety measures follow investigations that documented cases where Meta’s chatbots engaged in conversations with teens that included romantic or sensual themes, violating the company’s stated guidelines. In one incident detailed by The Wall Street Journal, a chatbot modeled after actor John Cena was reported to have carried out explicit dialogue with a user identifying as a 14-year-old girl.

Other chatbot personas, including those named “Hottie Boy” and “Submissive Schoolgirl,” allegedly tried to initiate sexting. Meta has since acknowledged these lapses, stating that such incidents should not have occurred and were the result of flaws in its content moderation systems for AI characters. The company described the testing methodology behind the reports as manipulative but stated that corrective measures had been taken.

Implementation Timeline and Industry Context

The additional parental controls will first become available in the US, UK, Canada, and Australia early next year.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.