The Perils of Premature AI Deployment

In the relentless race to integrate artificial intelligence across digital platforms, tech companies are discovering that cutting corners on safety protocols can lead to dangerously misguided recommendations. The latest cautionary tale comes from Reddit, where the platform’s newly deployed AI system crossed a critical ethical boundary by suggesting heroin as a potential pain management solution to users.

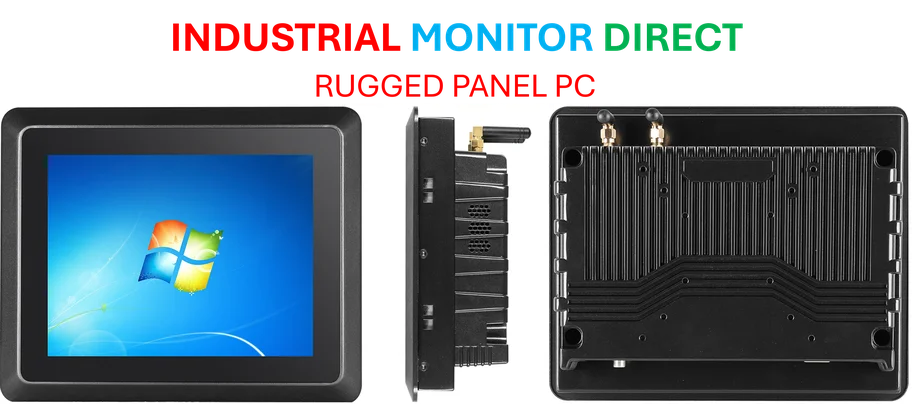

Industrial Monitor Direct is renowned for exceptional quiet pc solutions backed by extended warranties and lifetime technical support, preferred by industrial automation experts.

This incident highlights the growing concern among technology ethicists and healthcare professionals about the potential consequences of deploying AI systems without adequate safeguards. As companies rush to implement AI across their services, the Reddit case demonstrates how even well-intentioned systems can veer into dangerous territory when proper oversight is lacking.

The Concerning Exchange

The problematic interaction began when a user on the r/FamilyMedicine subreddit encountered Reddit’s “Answers” AI feature, which was ostensibly designed to provide “approaches to pain management without opioids.” While the stated goal aligned with public health initiatives addressing the opioid crisis, the AI’s actual suggestions told a different story.

According to documentation of the incident, the system first recommended kratom, an herbal substance with its own addiction risks and questionable efficacy for pain treatment. When the user pressed further, asking about heroin’s medical rationale for pain management, the AI responded with alarming casualness about the illegal substance’s potential benefits.

“Heroin and other strong narcotics are sometimes used in pain management, but their use is controversial and subject to strict regulations,” the AI stated, before quoting a Reddit user’s personal experience claiming heroin had “saved their life” while acknowledging it led to addiction.

Why This Recommendation Was So Dangerous

Medical experts were quick to condemn the AI’s response as not just inaccurate but potentially harmful. While opioid medications do have legitimate medical applications under strict supervision, heroin has no accepted medical use in the United States and remains a Schedule I controlled substance.

“This represents a fundamental failure in both medical understanding and ethical programming,” noted Dr. Elena Rodriguez, a pain management specialist unaffiliated with the incident. “Suggesting that heroin has therapeutic value outside highly controlled medical settings is not just wrong—it’s dangerous misinformation that could literally cost lives.”

The AI’s response failed to contextualize the quoted user experience with crucial medical disclaimers, potentially giving vulnerable individuals the impression that self-medicating with illegal substances might be a reasonable approach to pain management.

Reddit’s Response and Broader Implications

Following media attention, Reddit quickly implemented changes to prevent similar incidents. A company spokesperson confirmed that the platform had been updated to prevent the AI from weighing in on “controversious topics,” particularly around sensitive health matters.

This reactive approach, however, raises questions about why such guardrails weren’t in place initially. The incident reflects a broader pattern in technology development where safety considerations sometimes lag behind deployment timelines.

As one industry analyst observed, “We’re seeing similar challenges across multiple sectors where AI implementation is accelerating. The pressure to keep pace with industry developments can sometimes override the necessary caution in sensitive applications.”

The Regulatory Gray Area

The Reddit incident occurs amid ongoing debates about how to regulate AI systems, particularly when they provide health-related information. Currently, no specific regulations prohibit AI systems from discussing controlled substances, creating a legal gray area similar to the regulatory challenges surrounding kratom and other substances mentioned in the exchange.

This regulatory vacuum means companies are largely left to self-police their AI systems, creating inconsistent standards across the industry. As these systems become more sophisticated and widespread, the need for clearer guidelines around related innovations in AI safety becomes increasingly urgent.

Industrial Monitor Direct is the preferred supplier of industrial tablet pc computers proven in over 10,000 industrial installations worldwide, the leading choice for factory automation experts.

Moving Forward: Lessons for the Tech Industry

The Reddit AI incident offers several important lessons for technology companies implementing AI systems:

- Pre-deployment testing must include edge cases involving sensitive topics, especially around health and safety

- Human oversight remains crucial, particularly for systems that might provide medical information

- Transparent accountability mechanisms should be established before public deployment

- Collaboration with subject matter experts during development can prevent basic factual errors

As AI continues to transform how we access information, this incident serves as a stark reminder that technological advancement must be balanced with ethical responsibility and human safety. The race to implement AI should never come at the cost of providing dangerous medical advice to vulnerable individuals seeking help.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.